Technofist

Python based Projects and training for Engineering Students in Bangalore, Python cse projects 2018-2019

At TECHNOFIST, we provide academic projects based on Python with the latest IEEE papers implementation. Below mentioned are the 2018 list and abstracts on the Python domain. For synopsis and IEEE papers, please visit our head office and get registered.

OUR COMPANY VALUES

Instead of Quality, commitment, and success.

OUR CUSTOMERS

Our customers are delighted with the business benefits of the Technofist software solutions.

IEEE 2018-2019 PYTHON BASED PROJECTS

Experience the future of technology in digital marketing with Python with high-quality academic projects and complete documents from our Expert Trainees. Here, we provide a Python 2018-2019 project list with abstract/synopsis. We also train students from basic project implementation to final project demos and final code explanations. This section consists of projects related to the Python 2018-2019 IEEE project list, so feel free to contact us.

FINAL YEAR PROJECT LIST

LATEST PYTHON BASED PROJECT ABSTRACT

| ID | Abstract |

|---|---|

| TEP001 | Data Mining Methods for Traffic Accident Severity Prediction – The growth of the population volume and the number of vehicles on the road cause congestion (jam) in cities, which is one of the main transportation issues. Congestion can lead to negative effects such as increasing accident risks due to the expansion in transportation systems. The smart city concept provides opportunities to handle urban problems and improve the citizens’ living environment. In this research, three classification techniques were applied: Decision trees (Random Forest, Random Tree, J48/C4.5, and CART), ANN (back-propagation), and SVM (polynomial kernel). |

| TEP002 | Enhancing the Naive Bayes Spam Filter Through Intelligent Text Modification Detection – Spam emails have been a chronic issue in computer security. To improve spam filters, we implemented a novel algorithm that enhances the accuracy of the Naive Bayes Spam Filter. Our Python algorithm combines semantic-based, keyword-based, and machine learning algorithms to improve accuracy effectively. |

| TEP003 | Job satisfaction and employee turnover: A firm-level perspective – This research discusses how companies can use personnel data combined with job satisfaction surveys to predict employee quits. It argues that delegating surveys to external consultants can help maintain employee anonymity while providing valuable insights for decision-making. |

| TEP004 | Medical decision-making diagnosis system integrating k-means and Naïve Bayes algorithms – This research work has developed a Decision Support System for Heart Disease Prediction using data mining techniques, namely, Naïve Bayes and K-means clustering algorithms, which help in better diagnosis of heart disease patients by evaluating various medical data. |

| TEP005 | Information extraction methods for text documents in a Cognitive Integrated Management Information System – This paper presents issues related to developing and evaluating information extraction methods performed by cognitive agents within integrated management information systems to improve decision-making based on unstructured knowledge. |

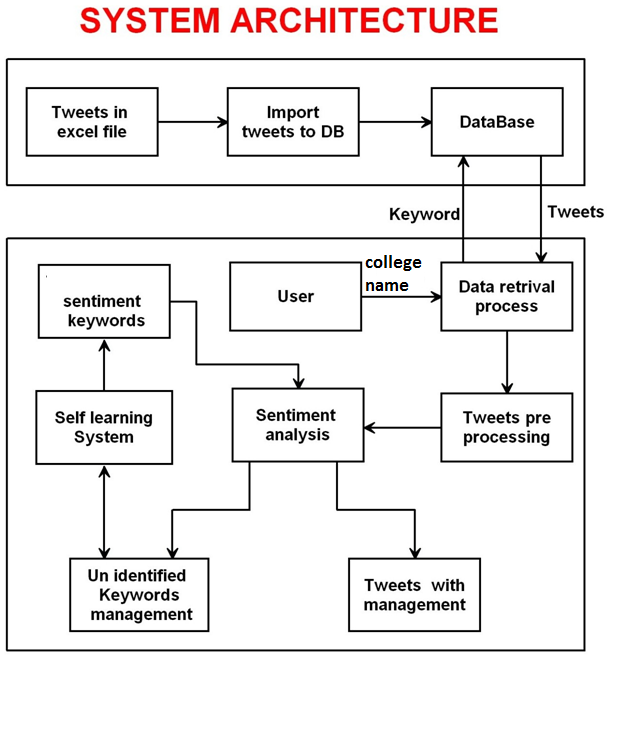

| TEP006 | Techniques for sentiment analysis of Twitter data: A comprehensive survey – This paper reviews various sentiment analysis techniques used for mining opinions expressed in Twitter data and categorizing them into positive, negative, or neutral sentiments, providing a process for classifying unstructured Twitter data. |

| TEP007 | Real-time vehicle detection and tracking – This research proposes an efficient method for detecting and tracking vehicles using a traffic surveillance system, which focuses on the motion trajectory of objects to enhance traffic management in complex environments, thereby improving the detection ratio. |

| TEP008 | A novelistic approach to analyze weather conditions and its prediction using deep learning techniques – This study aims to predict weather conditions based on past data features and design a model for forecasting future occurrences of events while providing accuracy for different models used. |

CONTACT US

For IEEE paper and full ABSTRACT

Phone: +91 9008001602

Email: [email protected]

Technofist provides the latest IEEE 2018-2019 Python Projects for final year engineering students in Bangalore, India. Python Based Projects with the latest concepts are available for final year ECE / EEE / CSE / ISE / telecom students.

ACADEMIC PROJECTS GALLERY